Davos’ jet-setters are tossing around a math term, Felix Salmon reports:

This year’s Davos was all about tail risk — or, more to the point, the absence thereof. The ECB’s Mario Draghi said — more than once — that he had “removed the tail risk from the euro”. His colleague Ignazio Visco went almost as far, saying that only a few tail risks remain.

[Felix lists a few more examples. . .]

And that was just the on-the-record comments: off the record, many more people, including at least one official US representative, were saying the same thing.

Felix ably explains why they don’t know what they are talking about.

[H]ere’s what none of them seem to understand: tail risks, by definition, can’t be measured. If you can look at a certain risk and determine that it has gone down, then it’s not a tail risk: it’s something else. Let’s say that last year there was a 25% chance that Greece would leave the euro: if something has a 25% chance of happening, it’s not a tail risk any more, it’s just a risk.

If you’re planning a trip to the Grand Canyon, you might think about buying travel insurance to cover yourself in the event you are seriously injured. But when you’re right up at the edge of the canyon and the ground starts slipping beneath your feet, at that point you have to actually do something to avoid injury or death. The risk has gone from being theoretical to being real — and at that point it’s not a tail risk any more, it’s a real possibility with a scarily high probability.

In fairness to the leaders of the world, they’re trying to avoid another financial catastrophe like 2008. But the global financial meltdown wasn’t really a tail risk. Back then, more than a few observers, including the World Economic Forum circa 2008, worried that weak lending practices could trigger a global calamity.

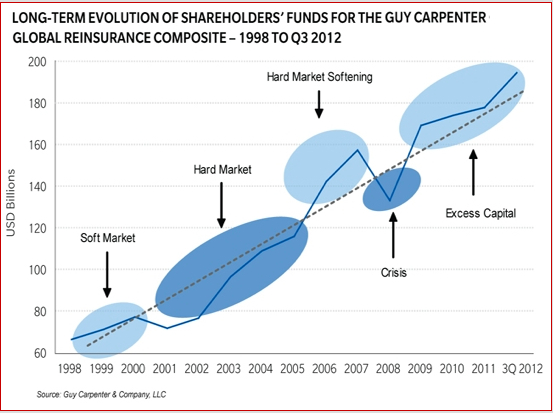

A better example of tail risk would be the 2011 New Zealand earthquakes, which occurred on fault lines no one knew existed. They also show the futility of trying to reduce a specific tail risk. Each tail risk is so unlikely that you’d waste time and money trying to alleviate it. You just have to accept that something you never considered is going to happen, and you need enough flexibility (read: capital) to address it when it does.
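
To put rough numbers on that last point, here is a minimal sketch of the arithmetic. The figures are made up for illustration (500 distinct tail risks, each with a 0.1% chance of striking in a given year), and the calculation assumes the risks are independent, which real-world risks often are not.

```python
def prob_at_least_one(p: float, n: int) -> float:
    """Chance that at least one of n independent events occurs,
    when each event has probability p of occurring."""
    return 1.0 - (1.0 - p) ** n


# Hypothetical inputs, chosen only to illustrate the point.
p_single = 0.001   # assumed chance of any one specific tail risk in a year
n_risks = 500      # assumed number of distinct, independent tail risks

p_any = prob_at_least_one(p_single, n_risks)
print(f"Chance of one specific tail risk: {p_single:.1%}")
print(f"Chance that at least one of {n_risks} strikes: {p_any:.1%}")
```

Under those assumptions, any single risk is a 1-in-1,000 shot, yet the chance that at least one of them hits in a given year is roughly 39%. Hedging any one of them individually buys almost nothing, which is why general-purpose flexibility is the better defense.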